|

【揭秘】价值超2000万/机柜!英伟达最强AI服务器内部细节详解!

可鉴智库 2024-06-03 20:01 广东

关注可鉴智库,学习更多服务器产业干货

2024年3月,英伟达发布了迄今为止最强大的 DGX 服务器。

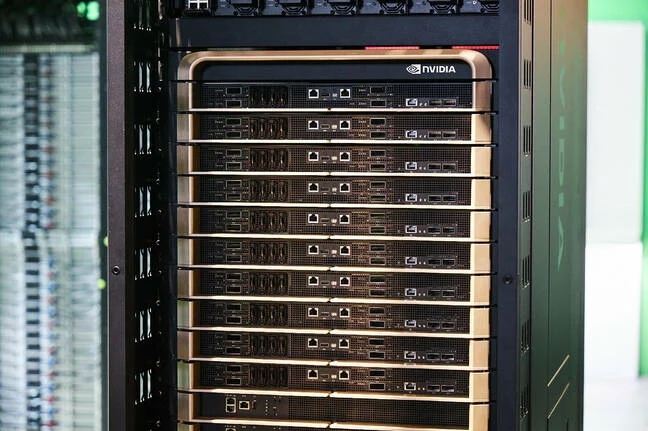

据了解,DGX GB200 系统机柜分三大类,分别是 DGX NVL72、NVL32、HGX B200 ,其中 DGX NVL72 是该系列中单价最高、算力最强的 AI 系统,内置 72 颗 B200 GPU 及 36 颗 Grace CPU,配备 9 台交换器,整机设计由 NVIDIA 主导且不能修改,但 ODM 厂商可以自己设计 I / O 及以太网连接系统。

DGX GB200 将于今年下半年开始量产,预计 2025 年产量最高可达 4 万台。价格方面,NVL72 每座机柜单价可达 300 万美元(约 2166 万元人民币!)

那么,这款AI服务器内部有哪些关键细节?

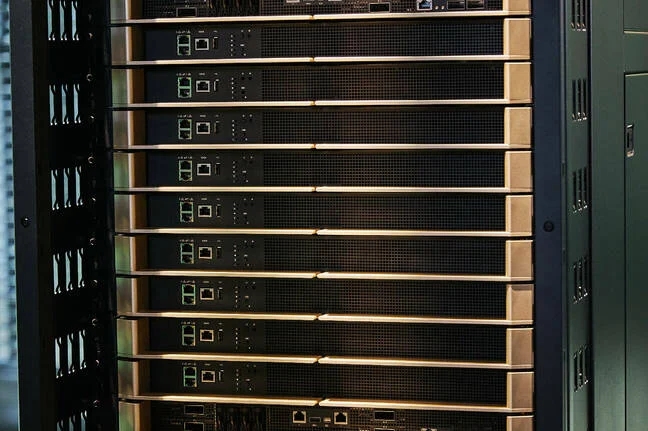

▲Nvidia的DGX GB200 NVL72是一个机架级系统,使用NVLink将72个Blackwell加速器网格化为一个大GPU

该系统被称为 DGX GB200 NVL72,是 Nvidia在11月份展示的基于 Grace-Hopper 超级芯片的机架系统的演变。然而,这个处理器的 GPU 数量是其两倍多。

1. 计算堆栈

虽然 1.36 公吨(3,000 磅)机架系统作为一个大型 GPU 进行销售,但它由 18 个 1U 计算节点组装而成,每个节点都配备了两个 Nvidia 的 2,700W Grace-Blackwell 超级芯片 (GB200)。

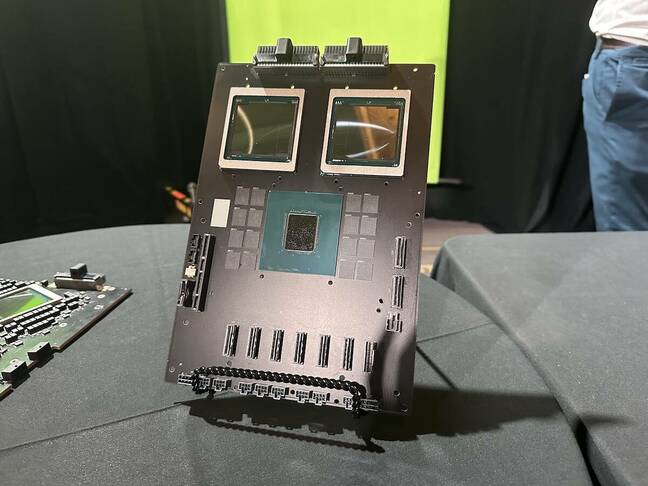

▲这里我们看到两个GB200超级芯片,在1U液冷机箱中减少了散热器和冷板

大量部件使用 Nvidia 的 900GBps NVLink-C2C 互连将 72 核 Grace CPU 与一对顶级规格的 Blackwell GPU 结合在一起。 总体而言,每个超级芯片均配备 864GB 内存(480GB LPDDR5x 和 384GB HBM3e),根据 Nvidia 的说法,在FP4 精度下可以推动 40 petaFLOPS 的计算能力。这意味着每个计算节点能够产生 80 petaFLOPS 的人工智能计算,整个机架可以执行 1.44 exaFLOPS 的超低精度浮点数学运算。

▲两个B200 GPU与Grace CPU结合就成为GB200超级芯片,通过900GB/s的超低功耗NVLink芯片间互连技术连接在一起

▲英伟达的Grace Blackwell超级芯片,简称GB200,结合了一个CPU和一对1200W GPU

系统前端是四个 InfiniBand 网络接口卡(请注意机箱面板左侧和中心的四个 QSFP-DD 壳体),它们构成了计算网络。该系统还配备了 BlueField-3 DPU,我们被告知它负责处理与存储网络的通信。

除了几个管理端口之外,该机箱还具有四个小型 NVMe。

▲18个这样的计算节点共有36CPU+72GPU,组成更大的“虚拟GPU”

▲NVL72的18个计算节点标配四个 InfiniBand 网络接口卡和一个BlueField-3 DPU

凭借两个GB200 超级芯片和五个网络接口卡,我们估计每个节点的功耗为 5.4kW- 5.7kW。绝大多数热量将通过直接芯片 (DTC) 液体冷却方式带走。Nvidia展示的 DGX 系统没有冷板,但我们确实看到了合作伙伴供应商的几个原型系统,例如联想的这个系统。

▲虽然英伟达展出的GB200系统没有安装冷却板,但联想的这款原型展示了它在实际生产中的样子

然而,与我们从 HPE Cray系列中以HPC为中心的节点或联想的 Neptune 系列中看到的以液体冷却所有设备不同,Nvidia 选择使用传统的 40mm 风扇来冷却网络接口卡和系统存储等低功耗外围设备。

2. 将它们缝合在一起

在主题演讲中,黄仁勋将 NVL72 描述为一个大型 GPU。这是因为所有 18 个超密集计算节点都通过位于机架中间的九个 NVLink 交换机堆栈相互连接。

▲积木式的搭建

▲在NVL72的计算节点之间是一个由九个NVLink交换机组成的堆栈,这些交换机为系统72个GPU中的每个提供1.8 TBps的双向带宽

Nvidia 的 HGX 节点也使用了相同的技术来使其 8 个 GPU 发挥作用。但是,NVL72 中的 NVLink 开关并不是像下面所示的 Blackwell HGX 那样将 NVLink 开关烘烤到载板上,而是一个独立的设备。

▲NVLink交换机集成到Nvidia的SXM载板中,如同上图展示的Blackwell HGX板

这些交换机设备内部有一对 Nvidia 的 NVLink 7.2T ASIC,总共提供 144 100 GBps 链路。每个机架有 9 个 NVLink 交换机,可为机架中 72 个 GPU 中的每个 GPU 提供 1.8 TBps(18 个链路)的双向带宽。

▲此处显示的是每个交换机上有两个第5代NVLink高速连接系统

NVLink 交换机和计算底座均插入盲插背板,并具有超过 2 英里(3.2 公里)的铜缆布线。透过机架的背面,您可以隐约看到一大束电缆,它们负责将 GPU 连接在一起,以便它们可以作为一个整体运行。

▲如果你仔细观察,可以看到形成机架NVLink背板的巨大电缆束

坚持使用铜缆而不是光纤的决定似乎是一个奇怪的选择,特别是考虑到我们正在讨论的带宽量,但显然支持光学所需的所有重定时器和收发器都会在系统已经巨大的基础上,再增加 20kW电力消耗。

这可以解释为什么 NVLink 交换机底座位于两个计算组之间,因为这样做可以将电缆长度保持在最低限度。

3. 电源、冷却和管理

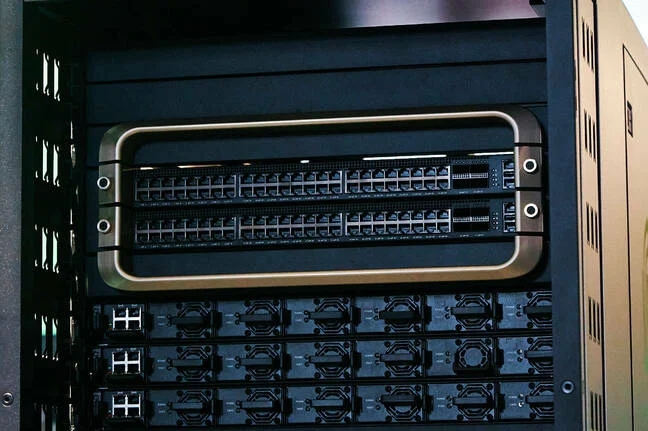

在机架的最顶部,我们发现了几个 52 端口 Spectrum 交换机 — 48端口千兆 RJ45接口和4个QSFP28 100Gbps 聚合端口。据我们所知,这些交换机用于管理和传输来自构成系统的各个计算节点、NVLink 交换机底座和电源架的流式遥测。

▲在NVL72的顶部,我们发现了几个交换机和六个电源架中的三个

这些交换机的正下方是从 NVL72 前面可见的六个电源架中的第一个 - 三个位于机架顶部,三个位于底部。我们对它们了解不多,只知道它们负责为 120kW 机架提供电力。

根据我们的估计,六个 415V、60A 电源就足以满足这一要求。不过,Nvidia 或其硬件合作伙伴可能已经在设计中内置了一定程度的冗余。这让我们相信它们的运行电流可能超过 60A。

不管他们是怎么做的,电力都是由沿着机架背面延伸的超大规模直流母线提供的。如果仔细观察,您可以看到母线沿着机架中间延伸。

▲根据黄仁勋的说法,冷却液被设计为以每秒2升的速度泵送通过机架

当然,冷却 120kW 的计算并不是小事。但随着芯片变得越来越热和计算需求不断增长,我们看到越来越多的数据中心服务商(包括 Digital Realty 和 Equinix)扩大了对高密度 HPC 和 AI 部署的支持。

就 Nvidia 的 NVL72 而言,计算交换机和 NVLink 交换机均采用液体冷却。据黄仁勋介绍,冷却剂以每秒 2 升的速度进入 25 摄氏度的机架,离开时温度升高 20 度。

4. 横向扩展

如果 DGX GB200 NVL72 服务器1.44 exaFLOPS的计算能力还不够,那么可以将其中的 8 个网络连接在一起,形成一个具有 576 个 GPU 的大型 DGX Superpod。

▲再用Quantum InfiniBand交换机连接,配合散热系统组成新一代DGX SuperPod集群。DGX GB200 SuperPod采用新型高效液冷机架规模架构,标准配置可在FP4精度下提供11.5 Exaflops算力和240TB高速内存。

▲八个DGX NVL72机架可以串在一起,形成英伟达的液冷DGX GB200 Superpod

如果需要更多计算来支持大型训练工作负载,则可以添加额外的 Superpod 以进一步扩展系统。这正是 Amazon Web Services 通过Project Ceiba所做的事情。

这款 AI 超级计算机最初于 11 月宣布,现在使用 Nvidia 的 DGX GB200 NVL72 作为模板。据报道,完成后该机器将拥有 20,736 个 GB200 加速器。然而,该系统的独特之处在于,Ceiba 将使用 AWS 自主开发的 Elastic Fabric Adapter (EFA) 网络,而不是 Nvidia 的 InfiniBand 或以太网套件。

英伟达表示,其 Blackwell 部件,包括机架规模系统,将于今年晚些时候开始投放市场。

One rack. 120kW of compute. Taking a closer look at Nvidia's DGX GB200 NVL72 beast

1.44 exaFLOPs of FP4, 13.5 TB of HBM3e, 2 miles of NVLink cables, in one liquid cooled unit

Thu 21 Mar 2024 // 13:00 UTC

GTC Nvidia revealed its most powerful DGX server to date on Monday. The 120kW rack scale system uses NVLink to stitch together 72 of its new Blackwell accelerators into what's essentially one big GPU capable of more than 1.4 exaFLOPS performance — at FP4 precision anyway.

At GTC this week, we got a chance to take a closer look at the rack scale system, which Nvidia claims can support large training workloads as well as inference on models up to 27 trillion parameters — not that there are any models that big just yet.

Nvidia's DGX GB200 NVL72 is a rack scale system that uses NVLink to mesh 72 Blackwell accelerators into one big GPU.

Nvidia's DGX GB200 NVL72 is a rack scale system that uses NVLink to mesh 72 Blackwell accelerators into one big GPU (click to enlarge)

Dubbed the DGX GB200 NVL72, the system is an evolution of the Grace-Hopper Superchip based rack systems Nvidia showed off back in November. However, this one is packing more than twice the GPUs.

Stacks of compute

While the 1.36 metric ton (3,000 lb) rack system is marketed as one big GPU, it's assembled from 18 1U compute nodes, each of which is equipped with two of Nvidia's 2,700W Grace-Blackwell Superchips (GB200).

Here we see two GB200 Superchips, minus heatspreaders and cold plates in a 1U liquid cooled chassis

Here we see two GB200 Superchips, minus heatspreaders and cold plates in a 1U liquid cooled chassis (click to enlarge)

You can find more detail on the GB200 in our launch day coverage, but in a nutshell, the massive parts use Nvidia's 900GBps NVLink-C2C interconnect to mesh together a 72-core Grace CPU with a pair of top-specced Blackwell GPUs.

In total, each Superchip comes equipped with 864GB of memory — 480GB of LPDDR5x and 384GB of HBM3e — and according to Nvidia, can push 40 petaFLOPS of sparse FP4 performance. This means each compute node is capable of producing 80 petaFLOPS of AI compute and the entire rack can do 1.44 exaFLOPS of super-low-precision floating point mathematics.

Nvidia's Grace-Blackwell Superchip, or GB200 for short, combines a 72 Arm-core CPU with a pair of 1,200W GPUs.

Nvidia's Grace-Blackwell Superchip, or GB200 for short, combines a 72 Arm-core CPU with a pair of 1,200W GPUs (click to enlarge)

At the front of the system are the four InfiniBand NICs — note the four QSFP-DD cages on the left and center of the chassis' faceplate — which form the compute network. The systems are also equipped with a BlueField-3 DPU, which we're told is responsible for handling communications with the storage network.

In addition to a couple of management ports, the chassis also features four small form-factor NVMe storage caddies.

The NVL72's 18 compute nodes come as standard with four Connect-X InfiniBand NICs and a BlueField-3 DPU.

The NVL72's 18 compute nodes come as standard with four Connect-X InfiniBand NICs and a BlueField-3 DPU (click to enlarge)

With two GB200 Superchips and five NICs, we estimate each node is capable of consuming between 5.4kW and 5.7kW a piece. The vast majority of this heat will be carried away by direct-to-chip (DTC) liquid cooling. The DGX systems Nvidia showed off at GTC didn't have cold plates, but we did get a look at a couple prototype systems from partner vendors, like this one from Lenovo.

While the GB200 systems Nvidia had on display didn't have coldplates installed, this Lenovo prototype shows what it might look like in production

While the GB200 systems Nvidia had on display didn't have coldplates installed, this Lenovo prototype shows what it might look like in production (click to enlarge)

However, unlike some HPC-centric nodes we've seen from HPE Cray or Lenovo's Neptune line which liquid cool everything, Nvidia has opted to cool low-power peripherals like NICs and system storage using conventional 40mm fans.

Stitching it all together

During his keynote, CEO and leather jacket aficionado Jensen Huang described the NVL72 as one big GPU. That's because all 18 of those super dense compute nodes are connected to one another by a stack of nine NVLink switches situated smack dab in the middle of the rack.

In between the NVL72's compute nodes are a stack of nine NVLink switches, which provide 1.8 TBps of bidirectional bandwidth each of the systems 72 GPUs.

In between the NVL72's compute nodes are a stack of nine NVLink switches, which provide 1.8 TBps of bidirectional bandwidth each of the systems 72 GPUs (click to enlarge)

This is the same tech that Nvidia's HGX nodes have used to make its eight GPUs behave as one. But rather than baking the NVLink switch into the carrier board, like in the Blackwell HGX shown below, in the NVL72, it's a standalone appliance.

The NVLink switch has traditionally been integrated into Nvidia's SXM carrier boards, like the Blackwell HGX board.

The NVLink switch has traditionally been integrated into Nvidia's SXM carrier boards, like the Blackwell HGX board shown here (click to enlarge)

Inside of these switch appliances are a pair of Nvidia's NVLink 7.2T ASICs, which provide a total of 144 100 GBps links. With nine NVLink switches per rack, that works out to 1.8 TBps — 18 links — of bidirectional bandwidth to each of the 72 GPUs in the rack.

Shown here are the two 5th-gen NVLink ASICS found in each of the NVL72's nine switch sleds.

Shown here are the two 5th-gen NVLink ASICS found in each of the NVL72's nine switch sleds (click to enlarge)

Both the NVLink switch and compute sleds slot into a blind mate backplane with more than 2 miles (3.2 km) of copper cabling. Peering through the back of the rack, you can vaguely make out the massive bundle of cables responsible for meshing the GPUs together so they can function as one.

If you look closesly, you can see the massive bundle of cables that form the rack's NVLink backplane.

If you look closesly, you can see the massive bundle of cables that form the rack's NVLink backplane (click to enlarge)

The decision to stick with copper cabling over optical might seem like an odd choice, especially considering the amount of bandwidth we're talking about, but apparently all of the retimers and transceivers necessary to support optics would have added another 20kW to the system's already prodigious power draw.

This may explain why the NVLink switch sleds are situated in between the two banks of compute as doing so would keep cable lengths to a minimum.

Nvidia: Why write code when you can string together a couple chat bots?

Nvidia turns up the AI heat with 1,200W Blackwell GPUs

How to run an LLM on your PC, not in the cloud, in less than 10 minutes

Oxide reimagines private cloud as... a 2,500-pound blade server?

Power, cooling, and management

At the very top of the rack we find a couple of 52 port Spectrum switches — 48 gigabit RJ45 and four QSFP28 100Gbps aggregation ports. From what we can tell, these switches are used for management and streaming telemetry from the various compute nodes, NVLink switch sleds, and power shelves that make up the system.

At the top of the NVL72, we find a couple of switches and three of six powershelves.

At the top of the NVL72, we find a couple of switches and three of six powershelves (click to enlarge)

Just below these switches are the the first of six power shelves visible from the front of the NVL72 — three toward the top of the rack and three at the bottom. We don't know much about them other than they're responsible for keeping the 120kW rack fed with power.

Based on our estimates, six 415V, 60A PSUs would be enough to cover that. Though, presumably Nvidia or its hardware partners have built in some level of redundancy into the design. That leads us to believe these might be operating at more than 60A. We've asked Nvidia for more details on the power shelves; we'll let you know what we find out.

However they're doing it, the power is delivered by a hyperscale-style DC bus bar that runs down the back of the rack. If you look closely, you can just make out the bus bar running down the middle of the rack.

According to CEO Jensen Huang, coolant is designed to be pumped through the rack at 2 liters per second.

According to CEO Jensen Huang, coolant is designed to be pumped through the rack at 2 liters per second (click to enlarge)

Of course, cooling 120kW of compute isn't exactly trivial. But with chips getting hotter and compute demands growing, we've seen an increasing number of bit barns, including Digital Realty and Equinix, expand support for high-density HPC and AI deployments.

In the case of Nvidia's NVL72, both the compute and NVLink switches are liquid cooled. According to Huang, coolant enters the rack at 25C at two liters per second and exits 20 degrees warmer.

Scaling out

If the DGX GB200 NVL72's 13.5 TB of HBM3e and 1.44 exaFLOPS of sparse FP4 ain't cutting it, eight of them can be networked together to form one big DGX Superpod with 576 GPUs.

Eight DGX NVL72 racks can be strung together to form Nvidia's liquid cooled DGX GB200 Superpod.

Eight DGX NVL72 racks can be strung together to form Nvidia's liquid cooled DGX GB200 Superpod (click to enlarge)

And if you need even more compute to support large training workloads, additional Superpods can be added to further scale out the system. This is exactly what Amazon Web Services is doing with Project Ceiba. Initially announced in November, the AI supercomputer is now using Nvidia's DGX GB200 NVL72 as a template. When complete, the machine will reportedly have 20,736 GB200 accelerators. However, that system is unique in that Ceiba will use AWS' homegrown Elastic Fabric Adapter (EFA) networking, rather than Nvidia's InfiniBand or Ethernet kit.

Nvidia says its Blackwell parts, including its rack scale systems, should start hitting the market later this year.

关于我们

北京汉深流体技术有限公司是丹佛斯中国数据中心签约代理商。产品包括FD83全流量自锁球阀接头,UQD系列液冷快速接头、EHW194 EPDM液冷软管、电磁阀、压力和温度传感器及Manifold的生产和集成服务。在国家数字经济、东数西算、双碳、新基建战略的交汇点,公司聚焦组建高素质、经验丰富的液冷工程师团队,为客户提供卓越的工程设计和强大的客户服务。

公司产品涵盖:丹佛斯液冷流体连接器、EPDM软管、电磁阀、压力和温度传感器及Manifold。

未来公司发展规划:数据中心液冷基础设施解决方案厂家,具备冷量分配单元(CDU)、二次侧管路(SFN)和Manifold的专业研发设计制造能力。

- 针对机架式服务器中Manifold/节点、CDU/主回路等应用场景,提供不同口径及锁紧方式的手动和全自动快速连接器。

- 针对高可用和高密度要求的刀片式机架,可提供带浮动、自动校正不对中误差的盲插连接器。以实现狭小空间的精准对接。

- 基于OCP标准全新打造的UQD/UQDB通用快速连接器也将首次亮相, 支持全球范围内的大批量交付。

|